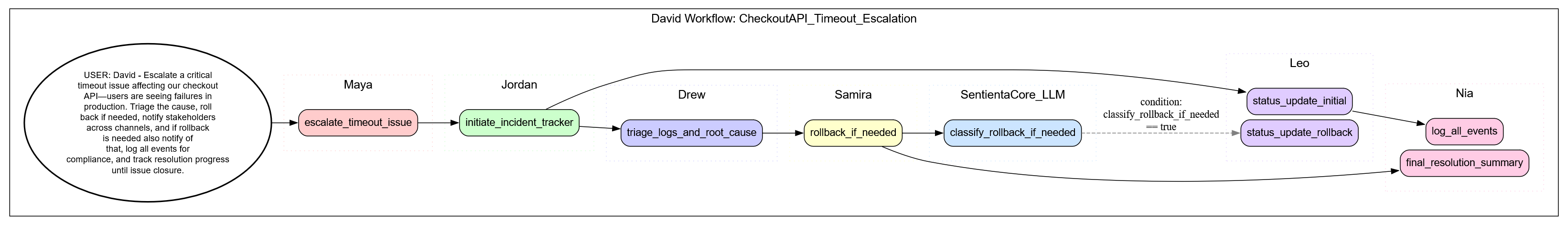

Connecting Sentienta Agents to APIs for Smarter Business Workflows

Accessing real-time and external data is essential for many business tasks, from market research to operational monitoring. Sentienta enables users to connect to external data sources through APIs, allowing agents to enhance their responses with up-to-date information.

This post examines two primary ways to achieve this within Sentienta: configuring a static API URL when creating an agent, and using the new Agent Marketplace agent, Ben, for flexible, on-demand data retrieval via natural language queries. Both methods help teams integrate API-driven insights directly into their workflows with minimal technical overhead.

Method 1: Adding an API URL When Creating an Agent

Sentienta allows users to connect agents directly to external APIs by specifying a fixed URL at the time of agent creation. This approach is best suited for situations where the API endpoint and its required parameters do not change frequently.

Example:

Consider setting up an agent using the “List of Free Public APIs” (e.g., https://www.freepublicapis.com/api/apis?limit=10&sort=best). By entering this URL in the agent’s configuration, the agent can retrieve and display up-to-date lists of publicly available APIs upon request. This empowers users to quickly find additional data sources relevant to their needs without searching manually.

- Setup: Create a new agent and add the “List of Free Public APIs” endpoint as the agent’s default URL.

- Result: When a user asks the agent for available APIs, the agent queries the service and returns relevant results.

This method is particularly effective for routine queries where the required data source remains constant and access is straightforward.

Note that all necessary parameters must be included when adding the URL to the agent’s description. (For example, some websites require the calling agent to provide a User-Agent string or other parameters identifying the application.)
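To see what such a request looks like outside Sentienta, here is a minimal Python sketch. It is not Sentienta's implementation: it only illustrates a GET request to the static URL above with a User-Agent header attached. The function names and the assumption that the endpoint returns JSON are illustrative.

```python
import json
import urllib.request

# Static endpoint from the example above; all query parameters are already in the URL.
API_URL = "https://www.freepublicapis.com/api/apis?limit=10&sort=best"


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build a GET request that identifies the caller through a User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_json(request: urllib.request.Request, timeout: float = 10.0):
    """Send the request and decode the body, assuming the API answers with JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `fetch_json(build_request(API_URL, "my-app/0.1"))` would perform the actual network request; `my-app/0.1` is a hypothetical identifier, so substitute whatever the target API expects.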

Method 2: Using the Ben Agent for Flexible, On-Demand API Access

The ‘Ben’ agent, available in the Document and Content Access section of the Agent Marketplace, enables users to connect to almost any external API using natural language instructions. By providing a URL template and relevant parameters within a query, users can retrieve data on demand, even from APIs with dynamic requirements.

As with any agent in the Agent Marketplace, using this agent is just a matter of adding it to your team and customizing the name, type, and persona.
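Conceptually, a URL template is a URL whose parameter values are placeholders waiting to be swapped for real values. A minimal Python sketch of that substitution, using the NASA endpoint from this post's example, might look like the following. It illustrates the idea only: how Ben performs the substitution internally is not described here, and `fill_url_template` is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def fill_url_template(template: str, values: dict) -> str:
    """Replace placeholder query values (e.g. START_DATE) with real ones."""
    parts = urlsplit(template)
    filled = [
        # Keep the parameter name; swap the value only if it is a known placeholder.
        (name, values.get(value, value))
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(filled)))


TEMPLATE = (
    "https://api.nasa.gov/neo/rest/v1/feed"
    "?start_date=START_DATE&end_date=END_DATE&api_key=KEY"
)

url = fill_url_template(
    TEMPLATE,
    {"START_DATE": "2023-09-07", "END_DATE": "2023-09-08", "KEY": "YOUR_KEY"},
)
print(url)
```

Working on the parsed query string rather than doing a plain text replace means a placeholder such as `KEY` cannot accidentally match part of the host name or path.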

Example:

Suppose you want to access NASA’s Near-Earth Object Web Service, which requires parameters for start_date, end_date, and a user API key (e.g., NASA NEO API). The URL and parameters can be entered as a natural language query to this agent:

User: The url is https://api.nasa.gov/neo/rest/v1/feed?start_date=START_DATE&end_date=END_DATE&api_key=KEY. The START_DATE is 2023-09-07 and the END_DATE is 2023-09-08. The value of KEY is YOUR_KEY.

Ben: Here’s a pretty summary of NASA’s Near-Earth Object (NEO) close approaches for September 7–8, 2023.

---

🪐 Overall Summary

- Total NEOs detected: 60

- Dates covered: September 7–8, 2023

- Potentially Hazardous Asteroids (PHAs): 5 (labeled below)

- Closest approach distance: 0.00007 AU (~10,361 km from Earth)

- Smallest object (est. min. diameter): ~0.9 m

- Largest object (est. max. diameter): ~695 m

---

📅 NEOs By Day

September 8, 2023 (31 NEOs detected)

Top highlights:

- Closest Approach:

  - (2023 RQ2) — approached at 0.00126 AU (189,304 km) at 06:42 UTC, diameter: ~6–13 m

- Largest Asteroid:

  - 340291 (2006 CV) — est. diameter 281–628 m, passed at 0.114 AU (17 million km)

- Potentially Hazardous Asteroids (PHAs):

  - 2019 FM2 — est. diameter ~201–449 m, 0.168 AU (25 million km)

- Fastest Relative Velocity:

  - (2023 QK1) — 29.09 km/s (104,711 km/h), 0.0586 AU away

- Sentry-monitored (Impact risk monitored):

  - (2023 RY15) — 0.00398 AU (595,000 km), sentry monitored, diameter: ~6.7–15 m

[Sentry risk page](http://api.nasa.gov/neo/rest/v1/neo/sentry/54383809?api_key=...)

---

September 7, 2023 (29 NEOs detected)

Top highlights:

- Closest Approach:

  - (2023 RS) — 0.00007 AU (10,361 km) at 14:26 UTC, diameter: ~0.9–2 m

- Largest Asteroid:

  - (2006 QE) — est. diameter 311–695 m, passed at 0.159 AU (23.7 million km)

- Potentially Hazardous Asteroids (PHAs):

...
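A summary like the one above boils down to a few aggregations over the JSON the API returns. The sketch below shows one way to compute some of the headline figures in Python. It assumes the response follows the NeoWs feed layout (a `near_earth_objects` mapping from date to a list of objects, each with `name`, `is_potentially_hazardous_asteroid`, and `close_approach_data[0].miss_distance.kilometers`); check these field names against NASA's documentation before relying on them. The sample data is invented for illustration, and this is not a description of how Ben works internally.

```python
def summarize_feed(feed: dict) -> dict:
    """Compute headline figures from a NeoWs-style feed dictionary (assumed layout)."""
    # Flatten the per-date lists into a single list of objects.
    objects = [
        neo
        for daily in feed["near_earth_objects"].values()
        for neo in daily
    ]
    hazardous = [n for n in objects if n["is_potentially_hazardous_asteroid"]]

    def miss_km(neo: dict) -> float:
        # Distances are assumed to arrive as strings, so convert before comparing.
        return float(neo["close_approach_data"][0]["miss_distance"]["kilometers"])

    closest = min(objects, key=miss_km)
    return {
        "total": len(objects),
        "hazardous": len(hazardous),
        "closest_name": closest["name"],
        "closest_km": miss_km(closest),
    }


def _neo(name: str, hazardous: bool, km: str) -> dict:
    """Build one made-up object in the assumed layout."""
    return {
        "name": name,
        "is_potentially_hazardous_asteroid": hazardous,
        "close_approach_data": [{"miss_distance": {"kilometers": km}}],
    }


# Invented sample data; not real NASA results.
SAMPLE_FEED = {
    "near_earth_objects": {
        "2023-09-07": [_neo("(Example A)", False, "250000.0")],
        "2023-09-08": [
            _neo("(Example B)", True, "17000000.0"),
            _neo("(Example C)", False, "900000.5"),
        ],
    }
}

print(summarize_feed(SAMPLE_FEED))
```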

Security Note on API Keys

With client-side API access, use only personal or public API keys, and only when the data involved is non-sensitive. API keys entered in the team dialog are handled in the browser and are not encrypted or securely stored; never use this method for sensitive information or high-value credentials.

The Ben agent is best suited to on-the-fly research, prototyping, or data gathering when endpoints and request parameters change frequently.

Conclusion

Sentienta’s flexible API integration unlocks a wide range of use cases for business teams. For example, an agent configured with a static URL can support daily competitor monitoring, environmental alerts, or compliance audits by consistently retrieving the same type of data. Meanwhile, the Ben agent makes it easy to perform ad hoc research, pull market data on demand, or gather custom reports by dynamically querying APIs in response to fast-changing business needs. These capabilities help teams save time, discover new opportunities, and keep information flowing directly into collaborative workflows, empowering better decision-making across projects.